PilotStudy-Group:Orquesta-VedranPogacnik

From CS 160 Fall 2008


Introduction

- Introduce the system being evaluated

Orquesta is a serious game aimed at DJs of all skill levels. It gives the player a means of practicing DJ beatmixing skills by combining different instruments' “tracks” into a composition. A “track” is a short recording of a particular instrument's sound. While a track is playing (indicated by the corresponding dial pointing to “on”), its sound is output continuously, producing a smooth, unbroken sound for that instrument. The player combines instruments and transitions between them in practice and campaign modes. Campaign mode presents the player with well-defined objectives: completing the objectives within the given time earns points and unlocks the next level. Practice mode provides a mixing environment without rules, objectives, scoring system, or time limit, so the player can compose a song however he wants.

- State the purpose and rationale of the experiment

The purpose of this usability test is to evaluate the Orquesta game by observing a user (the testee) play it, in order to identify problem areas and determine how well the design fits the target users. The testee will complete three tasks designed to cover most of the game's functionality. Since the previous version of the game, the interface has been significantly improved, and some functionality has been added while some has been discarded, which changed the tasks the player needs to complete. After the testing, recommendations will be made for fixing and improving the design.

Implementation and Improvements

- Describe all the functionality you have implemented and/or improved since submitting your interactive prototype.

Discarded features

- Mix Saving & Importing: Discarded because we wanted Orquesta to be more of a game and less of an application with a scoring system, which is what it had been until now. It also became apparent that saving or importing mixes is not crucial to improving the player's DJ'ing skills, which is the purpose of Orquesta.

- Quit: The Quit button provided no functionality beyond the window's “X” button (in the upper-right corner of the window on Microsoft Windows), so there was no benefit to keeping it.

Added features

- Level Selection: In campaign mode the user can now choose which level he wants to play. This came about because it was never intuitively clear how the player would save his progress in order to come back to the game later. With the Save Mix feature removed altogether, the user does not need to save progress; instead, he selects which level to play. Presumably, the higher the level, the harder the objectives. NOTE: for now only Level 1 is implemented. This addition improves the "Flexibility and Efficiency of Use" heuristic.

- Tutorial Navigation: The Orquesta tutorial used to be one-directional: the player could only go forward and could not revisit previous screens to refamiliarize himself with a particular part of the game. Now each tutorial screen has a “Previous” button that goes back one slide. The navigation controls are clearly pointed out on the first page of the tutorial. This addition improves the "Flexibility and Efficiency of Use" heuristic.

- In-Game Visual Feedback: We made significant changes to make the game more intuitive and to help the user prevent or recover from errors. For example, there is now visual support for the concept of the “beat”, designed to help the player make transitions on-beat. Since only Campaign Mode has the on-beat restriction, this support appears only in that mode. The visual feedback, explained in the tutorial, takes the form of a frame around the instruments' labels that flashes on the beat. This addition improves the "Recognition Rather than Recall" and "Help Users Recognize, Diagnose, and Recover from Errors" heuristics.

- In-Game Audio Feedback: When the user makes an off-beat transition, a negative (annoying) sound is played and a vinyl (strike) is broken. The negative sound is much shorter than a track, so it should not significantly disturb the mix or hinder the game-play experience. This addition improves the "Help Users Recognize [...] Errors" and "Match Between System and the Real World" heuristics.

- Power-ups: In Campaign Mode the user can receive power-ups, such as an x2 Points Multiplier. This multiplier rewards consecutive on-beat transitions and is activated after three successful consecutive transitions. Upon activation, a clearly visible pop-up message tells the user which power-up he just acquired. NOTE: for now only the x2 Points Multiplier power-up is implemented. This addition improves the "Visibility of System Status" heuristic.

- Miscellaneous Clarifications: Various areas of the game could have been explained better. We tried not to saturate the interface with text, so we reduced the amount of text compared with the previous iteration. Following the addition of new functionality, we added clarifications at various points in the game. We also made some labels and the background more appealing to the eye, to maximize the player's interest. This addition improves the "Visibility of System Status", "Recognition Rather than Recall", "Consistency and Standards", and "Error Prevention" heuristics.

- Bug Fixes: The version of the game shown at the interactive prototype presentation had many bugs. We fixed as many as possible, although later iterations may reveal others that we need to fix.
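The transition rules described in the In-Game Visual Feedback, In-Game Audio Feedback, and Power-ups items above can be summarized in a short model. This is a hypothetical Python sketch, not Orquesta's Flash code: the tempo (`BPM`), the timing window (`TOLERANCE_S`), and the base score (`BASE_POINTS`) are assumed values, and it also assumes that an off-beat transition resets the on-beat streak and cancels an active multiplier, which this report does not specify.

```python
# Hypothetical model of the Campaign Mode transition rules (not the actual game code).
# BPM, TOLERANCE_S and BASE_POINTS are assumed values chosen for illustration.
from dataclasses import dataclass

BPM = 120                 # assumed tempo of the level's tracks
TOLERANCE_S = 0.10        # assumed timing window (seconds) around each beat
BASE_POINTS = 100         # assumed points for one on-beat transition
STREAK_TO_ACTIVATE = 3    # consecutive on-beat transitions that trigger x2 Points


def is_on_beat(click_time_s: float, bpm: float = BPM, tolerance_s: float = TOLERANCE_S) -> bool:
    """Return True if the click lands within tolerance_s of the nearest beat."""
    beat_length = 60.0 / bpm
    offset = click_time_s % beat_length
    # Distance to the closest beat, whether it is the previous or the next one.
    return min(offset, beat_length - offset) <= tolerance_s


@dataclass
class CampaignState:
    score: int = 0
    strikes: int = 0              # broken vinyls
    streak: int = 0               # consecutive on-beat transitions
    multiplier_active: bool = False

    def make_transition(self, click_time_s: float) -> int:
        """Apply one transition and return the points it earned."""
        if not is_on_beat(click_time_s):
            # Off-beat: the negative sound plays and a vinyl (strike) breaks.
            # Assumption: the streak and any active multiplier are reset too.
            print("negative sound")
            self.strikes += 1
            self.streak = 0
            self.multiplier_active = False
            return 0
        # x2 applies only to transitions after the one that completes the streak.
        points = BASE_POINTS * (2 if self.multiplier_active else 1)
        self.score += points
        self.streak += 1
        if self.streak >= STREAK_TO_ACTIVATE and not self.multiplier_active:
            self.multiplier_active = True
            print("Power-up acquired: x2 Points Multiplier")  # stands in for the pop-up
        return points
```

For example, transitions at 0.5, 1.0, 1.52, 2.0, 2.25 and 3.0 seconds earn 100, 100, 100, 200, 0 and 100 points: the third on-beat transition activates the multiplier, the fourth is doubled, and the off-beat click at 2.25 s breaks a vinyl and resets the streak.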

Method

Participant

- (who -- demographics -- and how were they selected)

The testee was a 22-year-old woman currently pursuing her Bachelor's degree in Business Management at San Jose State University. She was selected because she had not yet seen the Orquesta game, so the game and its purpose were new to her. Moreover, she has no experience making music or playing games of any kind, but she is interested in making her own songs. As she put it, the only problem is that (as far as she knows) equipment for making customized songs is way out of her price range. She is therefore a suitable representative of our target user group. As a plus, she is very comfortable using a computer across a wide range of applications.

Apparatus

- (describe the equipment you used and where)

To keep her attention on the Orquesta game and its interface (and not on an unfamiliar computer), the game was run in Flash on her own laptop, which she is well accustomed to: a Dell Inspiron 700m running Windows XP. The usability evaluation session was held in an empty classroom on the San Jose State campus. The empty room minimized outside noise, and the familiar campus setting again helped her focus on the game rather than on new surroundings.

Tasks

- [you should have this already from previous assignments, but you may wish to revise it] describe each task and what you looked for when those tasks were performed

While the testee performed the tasks, I observed how she interpreted in-game actions, interface elements, and the underlying game logic.

The three tasks cover all features of the Orquesta game. In order, they are:

Complete Tutorial (EASY):

The tutorial mode explains the basics of the game and introduces the player to the functionality available in the Orquesta game. The tutorial outlines instrument selection, transition making, power-up acquisition, timer constraint, and level control (stop mix and restart).

Obtain Power-up (HARD):

Power-ups are an important part of many games because they increase the player's interest. The instructions for obtaining the power-up are clearly stated in campaign mode before the level starts: three consecutive on-beat transitions activate an x2 point multiplier for every subsequent successful transition.

Complete Level 1 (MEDIUM):

As clearly stated at the beginning of the level, to complete it the user must successfully complete all objectives (for now, the only objectives are transitions). Completing a level displays the Results Screen with the statistics for that level: a table listing the objectives, their completion times (if completed successfully), the points awarded, and the completion status of each objective.

Procedure

- Describe what you did and how

After greeting the testee, I gave her an overview of how the usability study would proceed. She was informed that she could stop the study at any time if she felt uncomfortable in any way. She was told that the purpose of the study was to “evaluate the interface” (without focusing on any particular criteria), and she was instructed to think aloud and verbalize her actions in the game.

The testee was not told what the game is about or how anything works; figuring that out was one of the aspects of the study. She was told that she would complete three in-game tasks covering all of the game's features, and she was given only high-level instructions (e.g. “complete level 1 in campaign mode”).

After each action she performed (successfully or unsuccessfully), if her thinking aloud did not reveal enough information to evaluate the interface and the game, she was prompted with questions of varying levels of detail.

During the first task, completing the tutorial, she was asked several questions about the feel of the tutorial. These questions aimed to determine how intuitive it was to reach certain parts of the game or fulfill certain requirements. The questions during the first task were mostly general, because the tutorial is a highly structured sequence of operations with pointers (literally arrows) indicating what the testee should click next. A typical question was: “Do you think there is too much text at any point in the tutorial?” After completing the first task, the testee was asked for her suggestions and comments, particularly what she liked or disliked about the tutorial.

For the second task, the testee was instructed to “acquire a power-up in the campaign mode”. She was asked many specific questions that did not suggest the answer (e.g. “Why do you think this happened?”), and she was asked what she expected an action to do before she performed it. The questions for this task focused on the logic of the game (e.g. “How do you think you acquire a power-up?”). Follow-up questions were asked when the result of an action did not match her expectation (e.g. “What do you think happened at this point? Why?”).

Again, only a high-level description was given for the third task: “complete level 1 in the campaign mode”. The questions for this task aimed to learn about the user's understanding of the game, the objectives of a level, in-game navigation, and the intuitiveness of the game overall and of specific actions (e.g. “How would you expect to perform this action?” or “What would you expect this button to do?”).

Test Measures

- Describe what you measured and why

| Measured | Why? |
|---|---|
| Amount of time it took the testee to complete each task. | To see which task the user spends the most time on, and to determine the clarity and difficulty of each task. These results must be interpreted together with the number of errors made, described below. |
| Amount of time it took the testee to learn how to perform a particular goal (both out-of-game and in-game goals). | To determine the intuitiveness of each goal and retention over time. This indicates how intuitive the interface and in-game tasks are, i.e. whether they rely more on recognition or on recall. It is also used to rank the severity of usability problems: the longer the time, the greater the severity. |
| Number of times the testee tried to find something (e.g. a button) but couldn't. | To reveal functionality or shortcuts that the testee expects to find. |
| Number of errors made per task, especially off-beat transitions, as well as other mistakes and lapses in game actions. | To ensure that the sequence of events in the game is intuitive and has smooth logical transitions. The assumption is that a task with more errors has an unintuitive logical sequence. |
| Verbal or non-verbal remarks, i.e. critical incidents (e.g. sounds of confusion, gestures, expressions of satisfaction). | To isolate points of confusion and interface inconsistencies. Each remark was documented and its cause noted (see the Raw Data section). |

Results

- Results of the tests

In this usability test, the dependent variables were the number of lapses, the number of mistakes, the time required to perform certain actions, and the number of critical incidents per task. The control variables were the type of computer used, its operating system, and the Flash environment version; these are assumed not to affect the dependent variables. The independent variable was the particular version of the Orquesta game: each iteration produces a new version whose functionality may differ from the previous one.

The following measurements of the dependent variables were made:

- Amount of time it took for the testee to complete each task:

The times it took the testee to finish the three tasks were, respectively [min:sec]: 0:27, 1:03, and 1:30 on each of two attempts (the first attempt was unsuccessful). However, post-task questions revealed that the testee stumbled upon the solutions to tasks 1 and 2 by accident, exposing severe gaps in her understanding. The measured times should therefore be discarded, since they bear no significance.

- Number of events when a user was trying to find something (e.g. button) but couldn’t:

At no point in the game was the testee stuck looking for something she couldn't find.

- Amount of time it took the testee to learn how to perform a particular goal:

Out-of-game tasks: getting to the different modes of the game and selecting instruments in campaign mode took 7 seconds. In-game tasks: learning where to click to perform a transition took 15 seconds on the first run through campaign mode. For the second attempt, the testee referred back to the tutorial, and the time it took to learn where to click dropped to 4 seconds.

- Errors made per task:

A number of mistakes were made. Across the three tasks there were, respectively, 0, 2, and 3 knowledge-based mistakes; 3, 2, and 4 rule-based mistakes; and 3, 7, and 2 lapses. An example of a rule-based mistake was clicking on the wrong area when trying to make a transition. An example of a knowledge-based mistake was transitioning off-beat, and examples of lapses were skipping parts of the tutorial and then being confused about the functionality presented on the following “slide” of the tutorial.

- Events of any verbal or non-verbal remarks- critical incidents:

In the Raw Data section, critical incidents appear only in the “during-task” subsections. Pre- and post-task remarks do not interfere with the natural flow of the task itself, so they were counted separately from the critical incidents. Note that some critical incidents are repeated.

There were 6 critical incidents in the first task, and 5 and 4 in the second and third tasks, respectively. On multiple occasions the testee was confused about the layout of the interface. For example, the purpose of the flashing glow around the instruments (intended to mark the beat for making transitions) was not clear to her.

The scoring system merely “graded” the testee and did not help her recover from her mistakes (making transitions off-beat). She made no connection between the score, the vinyls (intended to be “strikes”), and the objectives. The confusion about the objectives arose because they are stated before the level and never again; during the level they are only referenced by number (as in “objective #1”). There was also confusion about whether adjusting the volume affects the points, which is not stated anywhere in our game.

Finally, before the start of level 1 in campaign mode, the testee did not realize that the instruments she selected actually produce different sounds. Campaign mode offers a choice of seven instruments, three of which can be used at a time, and the testee did not connect the tracks she was hearing with the instruments she had selected.

The summary of critical incidents is available in the raw data section of this report.

Discussion

- What you learned from the pilot run? What you might change for the "real" experiment? what you might change in your interface from these results alone? If you'd like, you may include results and assumptions from other group members' tests here as well.

As expected, the testee breezed through the tutorial without reading much of the text. However, familiarizing oneself with the interface and the game features requires reading the text at various stages of the tutorial, and learning the level objectives requires carefully reading and understanding the text displayed before each level. Understanding the game thus appears to depend on reading the text. This suggests that the interface is not intuitive enough: the goals have to be carefully explained and understood, rather than inviting the user to act automatically.

One possible remedy for a game overloaded with monotonous text is to add cartoon-ish graphics in the background. For example, the DJ character might rock left and right to the beat, or he might literally make a transition from one vinyl to another on the turntable in front of him. Either way, there needs to be less text and more instantly recognizable visual feedback. As an alternative to heavy graphics (since we are not professional Flash developers), we might present the important text as bullet points instead of making it read like an academic paper.

As for the scoring system, to explain an error the user made, we could show a pop-up dialog, with a delay of a few seconds, saying something like “transition made off-beat”, where the text depends on the particular objective in question.

To clarify the objectives in-game, instead of simply referencing the objectives by number, we could display the next objective to be completed (e.g. “make a transition on-beat”).

Some rule-based mistakes were caused by the visual appearance of parts of our interface. We would address these by making interface objects look like what they are: like a button if clickable, or like a label if not.

To make power-up acquisition and in-game terminology more intuitive, we might show a countdown of the actions needed until the power-up is activated (even though Guitar Hero does not do this). The power-up text could also change, e.g. to “Double Points” instead of “x2 Points”, since “x2” might be understood as “second level”. For example, at the very beginning of the level we might show a label saying “3 on-beat transitions till x2 Points Multiplier”, presented concisely enough that the user is not distracted from the main purpose of the game.
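The proposed countdown label could be generated from the current on-beat streak. The following is a hypothetical Python sketch: the function name `power_up_hint` and the label wording (using the “Double Points” phrasing suggested above) are illustrative assumptions, not existing game code.

```python
# Hypothetical countdown label for the power-up; names and wording are assumptions.
STREAK_TO_ACTIVATE = 3  # on-beat transitions needed, per the campaign-mode instructions


def power_up_hint(streak: int, multiplier_active: bool) -> str:
    """Return a short label telling the player how close the power-up is."""
    if multiplier_active:
        return "Double Points active!"
    remaining = max(0, STREAK_TO_ACTIVATE - streak)
    noun = "transition" if remaining == 1 else "transitions"
    return f"{remaining} on-beat {noun} till Double Points"
```

At the start of a level, `power_up_hint(0, False)` returns "3 on-beat transitions till Double Points", and after two on-beat transitions it returns "1 on-beat transition till Double Points".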

To minimize the learning curve and maximize retention rate of the game functions, we might introduce a “summary of actions” or “hints” table accessible from the welcome screen. That way, the user will not need to go through the tutorial should he not remember how to do something.

Appendices

Materials:

- (all things you read --- demo script, instructions -- or handed to the participant -- task instructions)

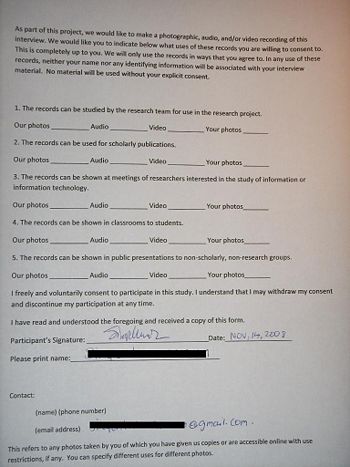

Signed consent form:

The following is an outline of the script I used for the usability study of Orquesta game:

- Read and sign agreement

  - Make sure she understood it

- Thanks for agreeing to do this study

- Why am I doing this

  - Part of a class project

  - We want to have a design that is intuitive and easy to use

  - YOU are key to making it happen!

- Background on the application

  - Serious game

  - We want to make it fun!

- How this usability test will function

  - This is not a test of you, it is a test of US: our design is being tested by you

  - I will give a list of tasks for you to accomplish

  - I am looking for your evaluation of the game-play experience

  - I might ask questions about specific buttons, actions, or experiences

    - To get feedback on specific areas / actions

  - I want to take pictures - is that ok? (No, it wasn't)

- How to act during the test

  - Encourage her to give feedback in real time

  - Feel free to ask questions, although I might not be able to answer all of them

    - Can't answer questions about how to accomplish tasks

    - Not to be mean, simply because I want to know if it isn't easy

  - I want you to be critical, we need to know how to improve

  - If you see things you like, we want to know about those too!

  - She will have a chance to give more feedback at the end of each task

Raw Data:

- (i.e., entire merged critical incident logs)

NOTE: Critical incidents are unexpected events that exceed an individual's normal ability to cope. By definition, they are recorded while the task is being performed, so the “during-task” subsections are the only ones that contain them. The other subsections contain supporting remarks and evaluations.

TASK: Complete Tutorial

- during-task:

  - why does it fail?

  - is there a meaning to the vinyl? (figured it out after a few moments)

  - is there a game objective associated with volume?

  - unclear whether to click on the button inside the bubble or on the screen

  - not sure what the vinyl means; thinks it is clickable; guesses its purpose is decoration

  - unclear whether points are awarded by clicking the knobs below the volume or the volume toggle itself

- post-task:

  - guess at the purpose of the game: (about tone, music)

  - tutorial has too much text, too confusing

TASK: Obtain Power-up

- pre-task:

  - confused about what campaign mode is: "can I go practice?"

  - too much text

  - not clear from the welcome screen what campaign mode is

- during-task:

  - not clear why the vinyl broke; with the time pressure, did not notice that it broke

  - why is it quiet? did something wrong?

  - unclear what "transition" means; guess is to continue the track when it stops; guesses that the purpose is different in this game

  - didn't hear the track stop when she clicked (clicked the wrong area); didn't hear anything different

  - unclear what on-beat means

- post-task:

  - unclear why the music didn't play when she pressed (she pressed off-beat)

  - not clear how the points are acquired

  - not clear why the points are doubled

  - too much information on the results screen

  - unclear why you would fail or pass; unclear on the purpose of the results screen and how it ties in with the next level

  - (x2) time pressure factor in game experience

TASK: Complete Level 1

- pre-task:

  - not sure what is meant by power-up (guess #1: finishing the game; guess #2: a successful transition; guesses that it is a musical term)

  - after about 10 seconds, clear how to get a power-up

  - not associating points with the power-up

  - "2x points": does it mean 100 points, or double the points already acquired?

  - power-up terminology unclear (guess #1: start the game, as when you start a car, you "power UP")

  - text not read carefully -> no way to understand the power-up

- during-task:

  - not clear why the glow keeps pulsating on all three instruments as opposed to only the one that is playing

  - not clear why the vinyl breaks (x2)

  - clear why DJ-power is active

  - clear why the instrument tracks are not playing

- post-task:

  - guesses that the purpose of the game is to match some sound

  - power-up & transition unclear

  - game-play logic unclear

  - volume toggle clear

  - would like some suggestions or instructions (maybe in the form of a pop-up)

  - understood the purpose of the vinyls correctly

  - objectives are listed by number instead of each one being stated